
"TopCoder is interested in incorporating a code coverage tool in the component development process. The reason is to measure how well the unit test cases are covering all of the code. The ultimate goal is to improve the quality of our components."

That's how the idea started in the forums and, as usual, it stirred up a chorus of opinions. TopCoder members offered tool proposals, interesting views and concerns, along with the usual, often unspoken questions: is this a good idea? Is it a bad idea? Will it really help us? Well, code coverage isn't necessarily good or bad, but it is a necessary element of a modern test-driven software development paradigm.

Based on the users' proposals from the forum, TopCoder started to look for the right code coverage tools - ideally ones that were small and versatile, with a reasonable learning curve. After identifying a few likely candidates for both Java and .NET, TopCoder gathered a few coders to test drive the tools. This article will provide a summary of those results.

1. Code Coverage

What is code coverage, after all? Let's try defining an outline for the process:

- run a code coverage tool (analyzer) to find out the areas of your code not covered by a set of test cases

- determine a quantitative measure, which is an indirect measure of quality

- create additional tests to increase coverage and reach a certain threshold, at which you can consider your code "well tested"

- identify redundant test cases
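The measuring, threshold and redundancy steps of this outline become straightforward once you know which lines each test case executes. The sketch below assumes that per-test data is already available as a `Map` from test name to line numbers (a hypothetical input: tools typically record coverage per run, not necessarily per test). It computes overall line coverage, compares it to a threshold, and flags a test as a redundancy candidate when every line it covers is also covered by the other tests.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Sketch of the outline: measure line coverage, compare to a threshold, flag redundant tests. */
class CoverageOutline {

    /** Fraction of executable lines executed by at least one test. */
    static double lineCoverage(Map<String, Set<Integer>> linesByTest, int executableLines) {
        Set<Integer> covered = new HashSet<>();
        for (Set<Integer> lines : linesByTest.values()) {
            covered.addAll(lines);
        }
        return (double) covered.size() / executableLines;
    }

    /**
     * Tests whose lines are all covered by the other tests. Each one is only a
     * candidate: removing two mutually redundant tests at once can lose coverage.
     */
    static List<String> redundantTests(Map<String, Set<Integer>> linesByTest) {
        List<String> redundant = new ArrayList<>();
        for (String test : linesByTest.keySet()) {
            Set<Integer> others = new HashSet<>();
            for (Map.Entry<String, Set<Integer>> e : linesByTest.entrySet()) {
                if (!e.getKey().equals(test)) {
                    others.addAll(e.getValue());
                }
            }
            if (others.containsAll(linesByTest.get(test))) {
                redundant.add(test);
            }
        }
        return redundant;
    }

    /** Convenience helper to build a set of line numbers. */
    static Set<Integer> lines(Integer... numbers) {
        return new HashSet<>(Arrays.asList(numbers));
    }

    public static void main(String[] args) {
        // Hypothetical per-test data for a class with 10 executable lines
        Map<String, Set<Integer>> linesByTest = new LinkedHashMap<>();
        linesByTest.put("testAdd", lines(1, 2, 3, 4));
        linesByTest.put("testRemove", lines(1, 5, 6, 7));
        linesByTest.put("testAddTwice", lines(1, 2, 3));

        double coverage = lineCoverage(linesByTest, 10);
        System.out.printf("line coverage: %.0f%%%n", coverage * 100);      // line coverage: 70%
        System.out.println("meets 85% threshold: " + (coverage >= 0.85)); // false
        System.out.println("redundant: " + redundantTests(linesByTest));  // [testAddTwice]
    }
}
```

Here the three tests together reach only 7 of 10 lines, so more tests are needed, while `testAddTwice` adds nothing that the other two don't already cover.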

Code coverage analysis (also known as test coverage analysis) is all about how thoroughly your tests exercise your code base. As mentioned above, it can be considered an indirect measure of quality: indirect because it gauges the degree to which the tests cover the code, rather than directly measuring the quality of the code itself. Code coverage can be viewed as white-box or structural testing, because the "assertions" are made against the internals of classes, not against the system's interfaces or contracts. It helps to identify paths in your program that are not being tested, and it is most useful and powerful when testing logic-intensive applications.

Here is a summary of the most common "measures" used in code coverage analysis; for more on the subject, see the introductory articles listed in the references at the end of this article.

- Basic measures
  - statement coverage (line coverage)
  - basic block coverage
  - decision coverage (branch coverage)
  - multiple condition coverage
  - path coverage
- Other measures
  - function coverage (method coverage)
  - call coverage
  - data flow coverage
  - loop coverage
  - race coverage
  - relational operator coverage
  - table coverage
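The difference between the two most basic measures, statement coverage and decision (branch) coverage, is easiest to see on a tiny method. The sketch below hand-inserts flags ("probes") of the kind a coverage tool adds automatically when it instruments code; the probe layout is a simplified assumption for illustration, not how EMMA or Cobertura actually store their data. A single test with a negative input executes every statement of `abs`, yet exercises only one of the two outcomes of its `if`.

```java
/** Hand-instrumented example contrasting statement coverage with decision (branch) coverage. */
class ProbeDemo {
    // One probe per statement in abs(): [0] r = x, [1] if (...), [2] r = -x, [3] return r
    static final boolean[] statementHit = new boolean[4];
    // One probe per outcome of the if: [0] = condition true, [1] = condition false
    static final boolean[] branchHit = new boolean[2];

    static int abs(int x) {
        statementHit[0] = true;
        int r = x;
        statementHit[1] = true;
        if (x < 0) {
            branchHit[0] = true;
            statementHit[2] = true;
            r = -x;
        } else {
            branchHit[1] = true;
        }
        statementHit[3] = true;
        return r;
    }

    /** Fraction of probes that were hit. */
    static double ratio(boolean[] probes) {
        int hit = 0;
        for (boolean p : probes) {
            if (p) {
                hit++;
            }
        }
        return (double) hit / probes.length;
    }

    public static void main(String[] args) {
        abs(-5); // the only "test case"
        System.out.println("statement coverage: " + ratio(statementHit)); // 1.0
        System.out.println("branch coverage: " + ratio(branchHit));       // 0.5
    }
}
```

Adding a second test with a non-negative input, such as `abs(3)`, sets the remaining branch probe and brings decision coverage to 100% as well.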

2. The tools

Four tools were chosen for review: EMMA and Cobertura for Java, and NCover and TeamCoverage for .NET (C#). The tools were evaluated against a set of TopCoder expectations and run against a set of representative components to see how they performed.

3. EMMA vs. Cobertura

For TopCoder Java component development we reviewed Vlad Roubtsov's EMMA and Mark Doliner's Cobertura (which is Spanish for coverage). First, let's look at a side-by-side comparison; then we'll point out some particulars of each tool, with excerpts from the reviewers' comments.

| TopCoder Expectations | EMMA | Cobertura |
|---|---|---|
| JDK 1.4 support | Yes | Yes |
| JDK 1.5 support | Yes | Yes |
| Partial line coverage (i.e., in the corner case where an entire method is put on a single line, will it still show useful coverage details?) | Yes | Yes (to some extent) |
| Support for Ant (i.e., how hard will it be to integrate into Ant: exec vs. custom task) | Yes (custom Ant task) | Yes (custom Ant task) |
| Support for JUnit integration (i.e., will it be able to execute JUnit tests and give details about the test cases hitting each method, or the percentage or lines of code covered by each test case?) | Yes (framework agnostic) | Yes (framework agnostic) |
| Support for programmatic access to coverage reports (i.e., XML or some other API to read coverage files in order to create a combined report across several submissions) | Yes (XML) | Yes (XML) |
| TXT reports (nice to have, as current scorecards do not allow HTML and copying and pasting from HTML reports can mess up formatting) | Yes | No |
| Licensing and pricing | Free (Common Public License) | Free (GPL; most of it) |
| Command line launch | Yes | Yes |
| Ease of use | Quite easy | Quite easy |

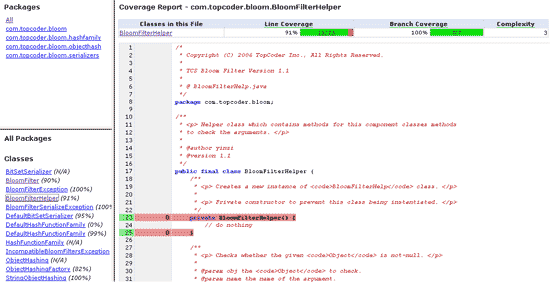

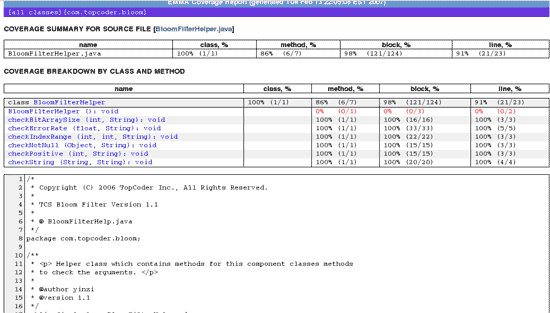

Cobertura uses bytecode instrumentation to generate its coverage reports, which show line and branch coverage measures, with the added twist of McCabe cyclomatic complexity. Below is a sample report:

EMMA takes a more complex approach and supports the class, method, line, and basic block coverage measures. It can instrument classes for coverage either offline (before they are loaded) or on the fly (using an instrumenting application classloader) and also handles entire .jar files without needing to expand them.

From the usage point of view both tools are easy to use and set up. "I found Emma to be easy to use," wrote madking, in his review of EMMA. "All I had to do was insert a couple of lines (which were provided in the documentation) into ant's build.xml file and I got coverage results. I didn't run into any problems during testing. I guess the only 'edge' case is when a single line is only partially covered, which, because Emma instruments byte code rather than source code, sometimes happens unexpectedly. It handles this fine, though, and shows the relevant line as partially covered."

I reviewed Cobertura and found that I could make the most of it after spending just one or two hours studying the documentation and the examples (though, of course, my familiarity with Ant probably helped).

Both tools can generate reports in HTML and XML, though only EMMA provides plain text output. Both can be run from the command line, provide custom Ant tasks, and are test framework agnostic, so neither favors a particular framework with special integration. Both are free and have a strong community behind them, which is comforting if you run into problems while using them. Note also that code must be compiled with debug information for Cobertura to generate reports, while "EMMA does not require access to the source code and degrades gracefully with decreasing amount of debug information available in the input classes."
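Because both tools write XML, the combined cross-submission reports mentioned in the expectations table can be built with the JDK's standard DOM parser. The sketch below assumes a Cobertura-style layout in which each class has a `<lines>` element containing `<line>` elements with `number` and `hits` attributes, and in which method-level `<lines>` repeat the same lines (so only the class-level ones are counted). The embedded report is a hypothetical, trimmed example; check the element and attribute names against a real report before relying on them.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilder;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

/** Reads line coverage from a Cobertura-style XML report (layout assumed, see above). */
class CoverageXmlReader {

    /** Returns {coveredLines, totalLines}, counting only class-level <lines> children. */
    static int[] countLines(String xml) throws Exception {
        DocumentBuilder builder = DocumentBuilderFactory.newInstance().newDocumentBuilder();
        Document doc = builder.parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        int covered = 0;
        int total = 0;
        NodeList classes = doc.getElementsByTagName("class");
        for (int i = 0; i < classes.getLength(); i++) {
            for (Node child = classes.item(i).getFirstChild(); child != null; child = child.getNextSibling()) {
                if (!"lines".equals(child.getNodeName())) {
                    continue; // skips <methods>, whose nested <lines> duplicate the class-level ones
                }
                for (Node line = child.getFirstChild(); line != null; line = line.getNextSibling()) {
                    if (line instanceof Element && "line".equals(line.getNodeName())) {
                        total++;
                        if (Integer.parseInt(((Element) line).getAttribute("hits")) > 0) {
                            covered++;
                        }
                    }
                }
            }
        }
        return new int[] {covered, total};
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical, trimmed report for illustration only
        String xml = "<coverage><packages><package name=\"demo\"><classes>"
                + "<class name=\"demo.Foo\" filename=\"demo/Foo.java\">"
                + "<methods><method name=\"bar\"><lines><line number=\"3\" hits=\"2\"/></lines></method></methods>"
                + "<lines><line number=\"3\" hits=\"2\"/><line number=\"4\" hits=\"0\"/>"
                + "<line number=\"5\" hits=\"1\"/></lines>"
                + "</class></classes></package></packages></coverage>";
        int[] counts = countLines(xml);
        System.out.printf("%d of %d lines covered%n", counts[0], counts[1]); // 2 of 3 lines covered
    }
}
```

Summing the `{covered, total}` pairs from several reports, rather than averaging their percentages, keeps large and small submissions correctly weighted in a combined figure.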

Next, let's look at some real usage examples based on TopCoder components.

EMMA:

| Component | Version | Classes tested | Classes total | Methods tested | Methods total | Blocks tested | Blocks total | Lines tested | Lines total |
|---|---|---|---|---|---|---|---|---|---|
| Auditor | 1.0.0 | 8 | 8 | 24 | 41 | 272 | 974 | 76.9 | 239 |
| Bloom Filter | 1.1.0 | 9 | 10 | 68 | 78 | 1,624 | 1,912 | 356.0 | 417 |
| Configuration Manager | 2.1.5 | 15 | 15 | 144 | 145 | 4,654 | 5,328 | 1,080.2 | 1,226 |
| Data Validation | 1.0.0 | 41 | 43 | 207 | 220 | 2,606 | 2,941 | | |
| Event eMail Processor | 1.0.0 | 14 | 15 | 77 | 93 | 1,246 | 2,110 | 287.8 | 497 |
| POP3 Client | 1.0.0 | 27 | 27 | 125 | 132 | 2,537 | 3,078 | 664.9 | 779 |
| SAML Schema | 1.1.0 | 48 | 50 | 351 | 448 | 9,437 | 14,976 | 2,099.1 | 3,189 |
| Simple Cache | 2.0.0 | 17 | 17 | 86 | 96 | 2,482 | 3,025 | 648.2 | 790 |
| Unit Test Generator | 1.0.0 | 11 | 11 | 49 | 65 | 1,620 | 2,457 | | |
| XML 2 SQL | 2.0.0 | 11 | 11 | 47 | 77 | 853 | 2,641 | 226.3 | 612 |
| XSL Engine | 1.0.1 | 10 | 10 | 63 | 63 | 1,486 | 1,594 | 354.0 | 383 |

Cobertura:

| Component | Version | Classes tested | Classes total | Lines tested | Lines total | Branches tested | Branches total | Complexity |
|---|---|---|---|---|---|---|---|---|
| Auditor | 1.0.0 | 8 | 11 | 163 | 240 | 36 | 38 | 2.950 |
| Bloom Filter | 1.1.0 | 10 | 13 | 358 | 419 | 68 | 76 | 2.854 |
| Configuration Manager | 2.1.5 | 15 | 17 | 1,121 | 1,270 | 315 | 323 | 4.000 |
| Data Validation | 1.0.0 | 17(43) | 18(44) | 598 | 659 | 174 | 194 | 2.248 |
| Event eMail Processor | 1.0.0 | 13(15) | 17(19) | 308 | 505 | 42 | 60 | 2.212 |
| POP3 Client | 1.0.0 | 27 | 33 | 684 | 788 | 142 | 146 | 2.755 |
| SAML Schema | 1.1.0 | 49 | 51 | 2,752 | 3,264 | 605 | 663 | 3.462 |
| Simple Cache | 2.0.0 | 14(17) | 19(22) | 695 | 807 | 136 | 143 | 3.200 |
| Unit Test Generator | 1.0.0 | 7(11) | 8(12) | 309 | 463 | 55 | 84 | 3.509 |
| XML 2 SQL | 2.0.0 | 11 | 14 | 246 | 620 | 40 | 101 | 3.471 |
| XSL Engine | 1.0.1 | 10 | 11 | 358 | 388 | 78 | 78 | 3.734 |

As you can see, a full side-by-side comparison isn't possible, since the tools use different measures. A few observations about Cobertura can be made, though. It reports interfaces as classes with N/A test coverage (EMMA simply ignores them), which can be a little confusing, especially at first. It can also stumble on classes with only static methods, and it reports complex one-liners as fully covered even when they aren't.

EMMA is pretty fast, and its memory overhead is just a few hundred bytes per class. Cobertura isn't quite as speedy, and it occasionally caused serious delays in test runs (I noticed that some tests that took a few seconds without Cobertura instrumenting the classes could take up to a few minutes with the instrumented classes).
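The Complexity column in the Cobertura table is McCabe's cyclomatic complexity; the fractional values suggest an average over methods. For a control-flow graph with E edges, N nodes and P connected components it is M = E - N + 2P, which for a single method works out to the number of decision points plus one. The sketch below applies the formula to small, hand-built graphs; the graphs and the edge-list representation are illustrative assumptions, not Cobertura's internals.

```java
import java.util.HashSet;
import java.util.Set;

/** McCabe cyclomatic complexity M = E - N + 2P for a control-flow graph given as an edge list. */
class Cyclomatic {

    /** Nodes are taken from the edges, which assumes the graph has no isolated nodes. */
    static int complexity(int[][] edges, int components) {
        Set<Integer> nodes = new HashSet<>();
        for (int[] e : edges) {
            nodes.add(e[0]);
            nodes.add(e[1]);
        }
        return edges.length - nodes.size() + 2 * components;
    }

    public static void main(String[] args) {
        // Hypothetical CFG of: if (a > b) { m = a; } else { m = b; } return m;
        // 0 = condition, 1 = then-branch, 2 = else-branch, 3 = return
        int[][] ifElse = {{0, 1}, {0, 2}, {1, 3}, {2, 3}};
        System.out.println(complexity(ifElse, 1)); // 2: one decision + 1

        // Hypothetical CFG of two consecutive if statements without else
        // 0 = cond1, 1 = then1, 2 = cond2, 3 = then2, 4 = exit
        int[][] twoIfs = {{0, 1}, {0, 2}, {1, 2}, {2, 3}, {2, 4}, {3, 4}};
        System.out.println(complexity(twoIfs, 1)); // 3: two decisions + 1
    }
}
```

A higher value means more independent paths through a method, and therefore more test cases needed to reach full branch coverage.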

4. NCover vs. TeamCoverage

For .NET (C#) component development, TopCoder reviewed Peter Waldschmidt's NCover and Microsoft's TeamCoverage.

| TopCoder Expectations | NCover | TeamCoverage |
|---|---|---|
| .NET 2.0 support | Yes | Yes |
| .NET 1.1 support | Yes | Yes |
| C# support | Yes | Yes |
| Partial line coverage | Yes | Yes |
| Support for NAnt | Yes | No |
| Support for programmatic access to coverage reports | Yes | Yes |
| Text reports | No | No |
| Licensing and pricing | GPL | Commercial (only as part of MS VS Team Suite) |
| Command line launch | Yes | Yes |
| Ease of use | Yes | No |

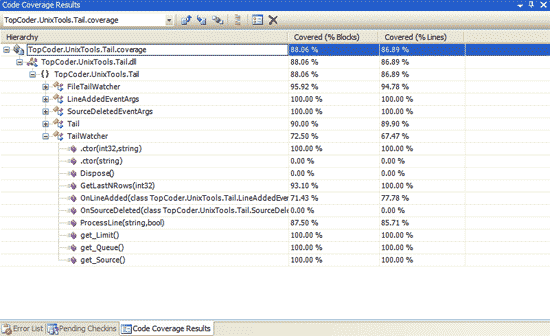

NCover reports method, block and line coverage, while TeamCoverage reports only block and line coverage.

Both tools support .NET 1.1, .NET 2.0 and C# (though, according to the NCover site, the latest NCover build does not work on .NET 1.1 due to a bug).

NCover has support for Nant as an exec task. real_vg, who reviewed TeamCoverage, noted that "even used through

Unfortunately, this isn't the end of the issues with TeamCoverage. "The reports can only be viewed in VS or accessed programmatically," wrote real_vg. The tool "is only available as a part of MS VS Team Suite, which has a high price." In terms of ease of use, the tool is "not really intuitive, very limited. The results cannot be converted into human readable format without special effort (writing a tool). It is easy to use only when working in VS and using VS built-in functionalities for everything, it doesn't seem to be possible to combine VS GUI possibilities considering coverage with NUnit."

"Looks like a good solution only for those who already own the MS VS Team Suite and use it to do all jobs related to testing, i.e. testing is done using MS testing framework. Command line possibilities are marginal; to use the tool efficiently one needs to write his own programs which will access coverage data programmatically using the MS API."

Here's a sample of how TeamCoverage presents its results:

Here's a look at real usage examples, based on TopCoder components:

TeamCoverage:

| Component | Version | Blocks tested | Blocks total | Lines tested | Lines total |
|---|---|---|---|---|---|
| BCP Output Sink | 1.0.0 | 94.81% | 925 | 93.31% | 883 |
| Bloom Filter | 1.0.0 | 97.81% | 457 | 99.06% | 426 |
| Configuration Manager | 2.0.0 | 87.56% | 1,214 | 83.44% | 1,055 |
| Data Migration Manager | 1.0.0 | 92.25% | 1,316 | 92.40% | 1,288 |
| Date Picker Control | 1.0.0 | 80.81% | 271 | 85.29% | 305 |
| Date Utility | 2.1.0 | 90.04% | 1,847 | 87.25% | 1,717 |
| Distributed Simple Cache | 1.0.0 | 62.07% | 1,147 | 63.58% | 974 |
| Global Distance Calculator | 1.0.0 | 99.34% | 303 | 99.24% | 263 |
| Graph Generator | 1.0.0 | 95.50% | 333 | 97.00% | 298 |
| Hashing Utility | 1.0.0 | 97.42% | 233 | 96.67% | 239 |
| Image Manipulation | 1.0.0 | 90.73% | 755 | 91.71% | 865 |
| Instant Messaging Framework | 1.0.0 | 92.00% | 888 | 93.97% | 846 |
| Line Graph | 1.1.0 | 94.60% | 352 | 94.10% | 372 |
| Object Pool | 1.0.0 | 90.39% | 333 | 86.76% | 339 |
| PDF Form Control | 1.0.0 | 57.22% | 2,370 | 59.43% | 2,149 |
| Random String Generator | 1.0.0 | 100.00% | 67 | 100.00% | 87 |
| Reference Collection | 1.0.0 | 91.75% | 424 | 91.28% | 446 |
| Report Data | 1.0.0 | 91.59% | 690 | 91.72% | 478 |
| Rounding Factory | 1.0.0 | 98.04% | 663 | 97.91% | 621 |
| RSS Library | 1.0.0 | 87.46% | 3,956 | 86.92% | 3,628 |
| Serial Number Generator | 1.0.0 | 78.72% | 1,043 | 78.79% | 1,075 |
| Simple XSL Transformer | 1.0.0 | 91.64% | 299 | 85.59% | 339 |
| Stream Filter | 1.0.0 | 96.68% | 422 | 97.62% | 376 |

NCover:

| Component | Version | Classes tested | Classes total | Methods tested | Methods total | Blocks tested | Blocks total | Lines tested | Lines total |
|---|---|---|---|---|---|---|---|---|---|
| BCP Output Sink | 1.0.0 | 11 | 11 | 237 | 388 | 1,084 | 1,406 | 1,245 | 1,570 |
| Bloom Filter | 1.0.0 | 25 | 25 | 367 | 371 | 1,537 | 1,731 | 1,622 | 1,622 |
| Configuration Manager | 2.0.0 | 60 | 60 | 446 | 467 | 2,232 | 2,552 | 2,302 | 2,519 |
| Currency Factory | 1.0.0 | 30 | 30 | 101 | 291 | 330 | 456 | 374 | 495 |
| Data Migration Manager | 1.0.0 | 47 | 47 | 574 | 589 | 2,409 | 2,716 | 2,593 | 2,834 |
| Date Picker Control | 1.0.0 | 19 | 19 | 226 | 231 | 549 | 658 | 585 | 688 |
| Date Utility | 2.0.0 | 59 | 59 | 577 | 589 | 1,960 | 2,424 | 2,227 | 2,694 |
| Distributed Simple Cache | 1.0.0 | 36 | 36 | 114 | 224 | 278 | 657 | 282 | 599 |
| Global Distance Calculator | 1.0.0 | 29 | 29 | 264 | 269 | 792 | 885 | 1,125 | 1,170 |
| Graph Generator | 1.0.0 | 37 | 37 | 292 | 305 | 1,133 | 1,247 | 1,333 | 1,346 |
| Hashing Utility | 1.0.0 | 30 | 30 | 296 | 298 | 1,239 | 1,330 | 1,384 | 1,452 |
| Image Manipulation | 1.0.0 | 96 | 96 | 631 | 640 | 2,564 | 2,759 | 2,958 | 3,047 |
| Instant Messaging Framework | 1.0.0 | 74 | 74 | 678 | 686 | 2,844 | 3,086 | 2,874 | 3,062 |
| Line Graph | 1.1.0 | 41 | 41 | 461 | 466 | 1,935 | 2,104 | 2,126 | 2,245 |
| Magic Numbers | 1.0.1 | 41 | 41 | 433 | 435 | 1,787 | 2,009 | 1,887 | 2,043 |
| Object Pool | 1.0.0 | 18 | 18 | 98 | 174 | 481 | 548 | 498 | 541 |
| PDF Form Control | 1.0.0 | 78 | 78 | 544 | 558 | 2,746 | 3,125 | 2,868 | 3,128 |
| Random String Generator | 1.0.0 | 6 | 6 | 97 | 97 | 498 | 530 | 464 | 498 |
| Reference Collection | 1.0.0 | 44 | 44 | 391 | 400 | 2,150 | 2,252 | 2,118 | 2,301 |
| Report Data | 1.0.0 | 15 | 15 | 166 | 169 | 1,107 | 1,175 | 1,153 | 1,175 |
| Rounding Factory | 1.0.0 | 45 | 45 | 469 | 469 | 1,711 | 2,635 | 1,738 | 2,642 |
| RSS Library | 1.0.0 | 121 | 121 | 2,010 | 2,075 | 9,581 | 10,964 | 10,882 | 11,840 |
| Serial Number Generator | 1.0.0 | 76 | 76 | 692 | 713 | 2,765 | 2,966 | 3,052 | 3,112 |
| Simple XSL Transformer | 1.0.0 | 29 | 29 | 432 | 435 | 1,316 | 1,663 | 1,594 | 1,946 |
| Stream Filter | 1.0.0 | 36 | 36 | 333 | 335 | 1,410 | 1,527 | 1,421 | 1,467 |

You have probably noticed the discrepancies between the two reports (the block and line counts differ considerably between the tools), so I'll turn to real_vg again for an explanation:

"TopCoder components have one problem with Team Coverage. The issue is that the tests in TopCoder components are usually built into the same assembly with the main classes, instead of using the separate assembly for main classes. The problem is that it is impossible to skip instrumentation and further coverage data gathering of the tests, as they are built into the same assembly. It is possible to modify the build file, but many of the components will need further modification - assembly internal methods will need to be changed to public, or the tests won't compile."

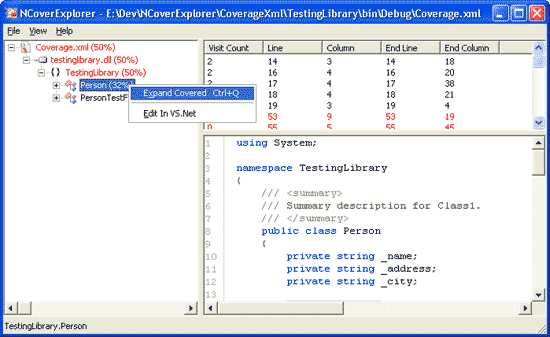
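The effect real_vg describes is simple arithmetic: when test classes are instrumented along with the component, their lines are added to both the tested and the total counts, and because tests usually run almost entirely, they tend to pull the percentage up. The sketch below uses hypothetical per-class numbers and a hypothetical naming convention (test classes end in `Tests`) to compare the two figures. It is written in Java for consistency with the other examples, but the arithmetic is the same for a .NET assembly.

```java
import java.util.Arrays;
import java.util.List;

/** Shows how counting test code alongside component code changes the coverage ratio. */
class TestCodeSkew {

    /** Per-class line counts as a coverage tool might report them. */
    static final class ClassCoverage {
        final String name;
        final int covered;
        final int total;

        ClassCoverage(String name, int covered, int total) {
            this.name = name;
            this.covered = covered;
            this.total = total;
        }
    }

    static double coverage(List<ClassCoverage> classes, boolean includeTests) {
        int covered = 0;
        int total = 0;
        for (ClassCoverage c : classes) {
            // Hypothetical convention: test classes end in "Tests"
            if (!includeTests && c.name.endsWith("Tests")) {
                continue;
            }
            covered += c.covered;
            total += c.total;
        }
        return total == 0 ? 0.0 : (double) covered / total;
    }

    public static void main(String[] args) {
        // Hypothetical assembly in which the tests are compiled in with the component
        List<ClassCoverage> assembly = Arrays.asList(
                new ClassCoverage("Cache", 70, 100),
                new ClassCoverage("CacheEntry", 30, 50),
                new ClassCoverage("CacheTests", 148, 150));
        System.out.printf("with tests:    %.1f%%%n", 100 * coverage(assembly, true));  // 82.7%
        System.out.printf("without tests: %.1f%%%n", 100 * coverage(assembly, false)); // 66.7%
    }
}
```

With these made-up numbers, the same component looks 16 points better when its own tests are counted, which is why the tests should be excluded from instrumentation whenever the build layout allows it.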

As far as NCover goes, reviewer fvillaf found that invoking it is easy and that the code only needed to be compiled with debug information. NCover fully supports partial line coverage and can be launched from the command line, which makes it scriptable, but its only output format is XML (though fvillaf notes that an XSL stylesheet is provided to transform the XML into a nice HTML page). One note of caution: NCover requires that the target .dll of the code under test "must be previously compiled for the corresponding framework" (.NET 1.1 or .NET 2.0).
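Turning XML coverage output into HTML, as the XSL shipped with NCover does, needs nothing more than a standard XSLT processor. The sketch below uses the JDK's `javax.xml.transform` API with a tiny hypothetical stylesheet and a made-up `<module>` element; NCover's real XML schema and stylesheet are different, so treat the element and attribute names as placeholders.

```java
import java.io.StringReader;
import java.io.StringWriter;
import javax.xml.transform.Transformer;
import javax.xml.transform.TransformerFactory;
import javax.xml.transform.stream.StreamResult;
import javax.xml.transform.stream.StreamSource;

/** Applies an XSL stylesheet to coverage XML to produce an HTML table. */
class XmlToHtml {

    // Hypothetical stylesheet: one table row per <module>, showing visited/total
    static final String SAMPLE_XSL =
            "<xsl:stylesheet version=\"1.0\" xmlns:xsl=\"http://www.w3.org/1999/XSL/Transform\">"
            + "<xsl:output method=\"html\"/>"
            + "<xsl:template match=\"/coverage\"><table>"
            + "<xsl:for-each select=\"module\"><tr>"
            + "<td><xsl:value-of select=\"@name\"/></td>"
            + "<td><xsl:value-of select=\"@visited\"/>/<xsl:value-of select=\"@total\"/></td>"
            + "</tr></xsl:for-each>"
            + "</table></xsl:template></xsl:stylesheet>";

    static String transform(String xml, String xsl) throws Exception {
        Transformer t = TransformerFactory.newInstance()
                .newTransformer(new StreamSource(new StringReader(xsl)));
        StringWriter out = new StringWriter();
        t.transform(new StreamSource(new StringReader(xml)), new StreamResult(out));
        return out.toString();
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical coverage data; not NCover's actual schema
        String xml = "<coverage><module name=\"BloomFilter\" visited=\"90\" total=\"100\"/></coverage>";
        System.out.println(transform(xml, SAMPLE_XSL));
    }
}
```

Keeping the raw data in XML and the presentation in a stylesheet means the same report can later be rendered as plain text for scorecards by swapping in a different stylesheet with `method="text"`.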

To help with the coverage output, the community has developed NCoverExplorer, which displays the reports in a Windows application. Here's a sample of what that looks like:

5. Conclusion

I hope that by reviewing these tools we have shed more light on code coverage and helped you see why TopCoder embarked on such a challenge. In the end, TopCoder selected Cobertura and NCover as its default code coverage tools and, starting today, component submissions will be required to include the coverage tool results. To pass screening, the unit test cases must reach a threshold of 85% coverage.
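The screening rule reduces to a simple ratio check. The article does not say which measure the 85% applies to, so the sketch below assumes line coverage and uses the Cobertura line counts from the table in section 3; the class and method names are illustrative, not part of any TopCoder tool.

```java
/** Checks coverage against the 85% screening threshold (line coverage assumed). */
class ScreeningCheck {
    static final double THRESHOLD = 0.85;

    static boolean passes(int linesTested, int linesTotal) {
        return linesTotal > 0 && (double) linesTested / linesTotal >= THRESHOLD;
    }

    public static void main(String[] args) {
        // Cobertura line counts (tested, total) taken from the table in section 3
        String[] names = {"Auditor", "Configuration Manager", "XML 2 SQL", "XSL Engine"};
        int[][] lines = {{163, 240}, {1121, 1270}, {246, 620}, {358, 388}};
        for (int i = 0; i < names.length; i++) {
            double pct = 100.0 * lines[i][0] / lines[i][1];
            System.out.printf("%-22s %5.1f%% %s%n", names[i], pct,
                    passes(lines[i][0], lines[i][1]) ? "pass" : "fail");
        }
    }
}
```

Under that assumption, Configuration Manager (88.3%) and XSL Engine (92.3%) would pass, while Auditor (67.9%) and XML 2 SQL (39.7%) would not.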

As I stated at the beginning of this article, I believe this is the right next step for TopCoder to take. As we all begin to grapple with the day-to-day realities of implementing code coverage, though, I encourage you to maintain your perspective: code coverage tools provide valuable insight into unit testing, but remember that they can be misused. It's easy to mistake a high coverage rate for code that is flawless, but that isn't necessarily the case.
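A small example of why high coverage is not the same as correctness: the single check in `main` below executes every line of the hypothetical `average` method (it has no branches, so statement and branch coverage are both 100%), yet the method still overflows for large inputs. Widening to `long` is one possible fix among several.

```java
/** Full coverage, still buggy: one passing check covers every line of average(). */
class CoverageIsNotCorrectness {

    // Bug: a + b overflows int when the true sum exceeds Integer.MAX_VALUE
    static int average(int a, int b) {
        return (a + b) / 2;
    }

    // One possible fix: add in long arithmetic, then narrow the halved result back to int
    static int safeAverage(int a, int b) {
        return (int) (((long) a + b) / 2);
    }

    public static void main(String[] args) {
        // The only "unit test": it passes and yields 100% coverage of average()
        System.out.println(average(2, 4) == 3);                               // true
        // An input that the fully covered code still gets wrong
        System.out.println(average(Integer.MAX_VALUE, Integer.MAX_VALUE));     // -1
        System.out.println(safeAverage(Integer.MAX_VALUE, Integer.MAX_VALUE)); // 2147483647
    }
}
```

A coverage tool would report `average` as fully tested after the first check alone; only a test aimed at boundary values exposes the bug.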

Code coverage, particularly in combination with automated testing, can be a powerful tool. One of the best pieces of advice that I've found on the matter, though, was a reminder that no tool is as valuable as your own best judgment. "Code coverage analysis tools," wrote Brian Marick, "will only be helpful if they're used to enhance thought, not replace it."

References:

1) Introduction to code coverage by Lasse Koskela

2) Code coverage analysis by Steve Cornett

3) Measure test coverage with Cobertura by Elliotte Rusty Harold

4) In pursuit of code quality: Don't be fooled by the coverage report by Andrew Glover

5) How to misuse code coverage [PDF] by Brian Marick

6) www.ncover.org

7) emma.sourceforge.net

8) cobertura.sourceforge.net